Sample script to connect to oracle database

Here is the sample script to connect to oracle database and get value.

sqllog=/tmp/sqllog.dat

spoolout=/tmp/spool.out

sqlplus -s /nolog <<EOF > $sqllog

set term off

set head off

set linesize 300

set feedback off

set trimspool on

set serveroutput on

whenever oserror exit

whenever sqlerror exit sql.sqlcode

connect OraUser/OraPassword@OraName

spool $spoolout

select * from dual;

spool off

exit

EOF

rc=$?

if [[ $rc != 0 ]];then

echo “unable to connect to oracle database”

fi

Filter Stage

Filter Stage:

Filter stage is a processing stage used to filter database based on filter condition.

The filter stage is configured by creating expression in the where clause.

Scenario1:Check for empty values in the customer name field.We are reading from sequential file and hence we should check for empty value instead of null.

Scenario 2:Comparing incoming fields.check transaction date falls between strt_dt and end_dt and filter those records.

Input Data:

| CUSTOMER_ID | CUSTOMER_NAME | TRANSACTION_DT | STR_DT | END_DT |

| 1 | UMA | 1/1/2010 | 5/20/2010 | 12/20/2010 |

| 1 | UMA | 5/28/2011 | 5/20/2010 | 12/20/2010 |

Output:

| CUSTOMER_ID | CUSTOMER_NAME | TRANSACTION_DT | STR_DT | END_DT |

| 1 | UMA | 5/28/2011 | 5/20/2010 | 12/20/2010 |

Reject:

| CUSTOMER_ID | CUSTOMER_NAME | TRANSACTION_DT | STR_DT | END_DT |

| 1 | UMA | 1/1/2010 | 5/20/2010 | 12/20/2010 |

Partition data based on CUSTOMER_ID to make sure all rows with same key values process on the same node.

Condition : where TRANSACTION_DT Between STRT_DT and END_DT

Actual Output:

Actual Reject Data:

Scenario 3:Evaluating input column data

Scenario 3:Evaluating input column data

ex:Where CUSTOMER_NAME=’UMA’ AND CUSTOMER_ID=’1′

Output :

Funnel Stage

Funnel Stage:

Funnel stage is used to combine multiple input datasets into a single input dataset.This stage can have any number of input links and single output link.

It operates in 3 modes:

Continuous Funnel combines records as they arrive (i.e. no particular order);

Continuous Funnel combines records as they arrive (i.e. no particular order);

Sort Funnel combines the input records in the order defined by one or more key fields;

Sequence copies all records from the first input data set to the output data set, then all the records from the second input data set, etc.

Note:Metadata for all inputs must be identical.

Sort funnel requires data must be sorted and partitioned by the same key columns as to be used by the funnel operation.

Hash Partition guarantees that all records with same key column values are located in the same partition and are processed in the same node.

Go to the properties of the funnel stage page and set Funnel Type to continuous funnel.

Note:In order to use sequence funnel you need to specify which order the input links you need to process and also make sure the stage runs in sequential mode.

Usually we use sequence funnel when we create a file with header,detail and trailer records.

3)Sort Funnel:

Note: If you are running your sort funnel stage in parallel, you should be aware of the various

Note: If you are running your sort funnel stage in parallel, you should be aware of the various

considerations about sorting data and partitions

Thats all about funnel stage usage in datastage.

Datastage External Filter Stage

External Filter stage:

External filter stage is a processing stage allows you to specify a UNIX command that acts as a filter on the data we are processing.

An example is use a grep command for a certain string or pattern and discards records which did not contain match.

Input Data:

| CUSTOMER_ID | CUSTOMER_NAME |

| 1 | UMA |

| 2 | KATRU |

| 3 | POOJI |

Now i want to use grep command to filter input data which contains “UMA” in the customer_name field.

Command : grep -w “UMA”

Interesting i found one issue with stage also.I have added a parameter DBPasswd as encrypted in the parameters page and used cat command in the filter stage and it is displaying the password.

In the log i found that it is decrypting the passwd like below.

In the log i found that it is decrypting the passwd like below.

stg_External_Filter,0: Wrapped Unix command ‘cat dsblog’ terminated with error, exit code 1.stg_External_Filter.

I don’t know whether it is datastage bug or it is the way the stage is behaving.

Thanks

Merge Stage

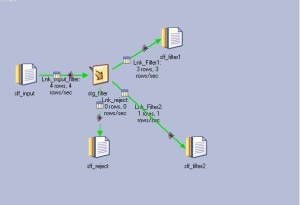

Merge Stage:

The Merge stage is a processing stage. It can have any number of input links, a single output link, and the same number of reject links as there are update input links.(according to DS documentation)

Merge stage combines a mster dataset with one or more update datasets based on the key columns.the output record contains all the columns from master record plus any additional columns from each update record that are required.

A master record and update record will be merged only if both have same key column values.

The data sets input to the Merge stage must be key partitioned and sorted. This ensures that rows with the same key column values are located in the same partition and will be processed by the same node. It also minimizes memory requirements because fewer rows need to be in memory at any one time.

As part of preprocessing your data for the Merge stage, you should also remove duplicate records from the master data set. If you have more than one update data set, you must remove duplicate records from the update data sets as well.

Unlike Join stages and Lookup stages, the Merge stage allows you to specify several reject links. You can route update link rows that fail to match a master row down a reject link that is specific for that link. You must have the same number of reject links as you have update links. The Link Ordering tab on the Stage page lets you specify which update links send rejected rows to which reject links. You can also specify whether to drop unmatched master rows, or output them on the output data link.

Example :

| Master dataset: | |

| CUSTOMER_ID | CUSTOMER_NAME |

| 1 | UMA |

| 2 | POOJITHA |

| Update dataset1 | |||

| CUSTOMER_ID | CITY | ZIP_CODE | SEX |

| 1 | CYPRESS | 90630 | M |

| 2 | CYPRESS | 90630 | F |

| Output: | ||||

| CUSTOMER_ID | CUSTOMER_NAME | CITY | ZIP_CODE | SEX |

| 1 | UMA | CYPRESS | 90630 | M |

| 2 | POOJITHA | CYPRESS | 90630 | F |

Merge stage configuration steps:

Unmatched Masters Mode:Keep means that unmatched rows (those without any updates) from the master link are output; Drop means that unmatched rows are dropped instead.

Warn On Reject Updates:True to generate a warning when bad records from any update links are rejected.

Warn On Unmatched Masters:True to generate a warning when there are unmatched rows from the master link.

Partitioning:Hash on both master input and update input as shown below:

Partitioning:Hash on both master input and update input as shown below:

Remove a record from the updateds1 and check the output:

Check for the datastage warning in the job log as we have selected Warn on unmatched masters = TRUE

stg_merge,0: Master record (0) has no updates.

stg_merge,1: Update record (1) of data set 1 is dropped; no masters are left.

Scenarios 3:Drop unmatched master record and capture reject records from updateds1

Scenario 4:Insert a duplicate record with same customer id in the master dataset and check for the results.

Scenario 4:Insert a duplicate record with same customer id in the master dataset and check for the results.

Look at the output and it is clear that merge stage automatically dropped the duplicate record from master dataset.

Look at the output and it is clear that merge stage automatically dropped the duplicate record from master dataset.

Scenario 4:Added new updatedataset2 which contains following data.

Scenario 4:Added new updatedataset2 which contains following data.

| Update Dataset2 | |

| CUSTOMER_ID | CITIZENSHIP |

| 1 | INDIAN |

| 2 | AMERICAN |

Still we have duplicate row in the master dataset.if you compile the job with above design you will get compilation error like below.

If you look ate the above figure you can see 2 rows in the output becuase we have a matching row for the customer_id = 2 in the updateds2 .

If you look ate the above figure you can see 2 rows in the output becuase we have a matching row for the customer_id = 2 in the updateds2 .

Scenario 5:add a duplicate row for customer_id=1 in updateds1 dataset.

Scenario 5:add a duplicate row for customer_id=1 in updateds1 dataset.

Now we have duplicate record both in master dataset and updateds1.Run the job and check the results and warnings in the job log.

Now we have duplicate record both in master dataset and updateds1.Run the job and check the results and warnings in the job log.

No change the results and merge stage automatically dropped the duplicate row.

Scenario 6:modify a duplicate row for customer_id=1 in updateds1 dataset with zipcode as 90630 instead of 90620.

Run the job and check output results.

Run the job and check output results.

I ran the same job multiple times and found the merge stage is taking first record coming as input from the updateds1 and dropping the next records with same customer id.

I ran the same job multiple times and found the merge stage is taking first record coming as input from the updateds1 and dropping the next records with same customer id.

This post covered most of the merge scenarios.

Lookup Stage

Lookup Stage:

The Lookup stage is most appropriate when the reference data for all lookup stages in a job is small enough to fit into available physical memory. Each lookup reference requires a contiguous block of shared memory. If the Data Sets are larger than available memory resources, the JOIN or MERGE stage should be used.

Lookup stages do not require data on the input link or reference links to be sorted. Be aware, though, that large in-memory lookup tables will degrade performance because of their paging requirements. Each record of the output data set contains columns from a source record plus columns from all the corresponding lookup records where corresponding source and lookup records have the same value for the lookup key columns. The lookup key columns do not have to have the same names in the primary and the reference links.

The optional reject link carries source records that do not have a corresponding entry in the input lookup tables.

You can also perform a range lookup, which compares the value of a source column to a range of values between two lookup table columns. If the source column value falls within the required range, a row is passed to the output link. Alternatively, you can compare the value of a lookup column to a range of values between two source columns. Range lookups must be based on column values, not constant values. Multiple ranges are supported.

There are some special partitioning considerations for Lookup stages. You need to ensure that the data being looked up in the lookup table is in the same partition as the input data referencing it. One way of doing this is to partition the lookup tables using the Entire method.

Lookup stage Configuration:Equal lookup

You can specify what action need to perform if lookup fails.

Scenario1: Continue

Choose entire partition on the reference link

Choose entire partition on the reference link

Scenario2:Fail

Job aborted with the following error:

Job aborted with the following error:

stg_Lkp,0: Failed a key lookup for record 2 Key Values: CUSTOMER_ID: 3

Scenari03:Drop

If we select reject as lookup failure condition then we need to add reject link otherwise we get compilation error.

If we select reject as lookup failure condition then we need to add reject link otherwise we get compilation error.

Business scenario:we have input data with customer id and customer name and transaction date.We have customer dimension table with customer address information.Customer can have multiple records with different start and active dates and we want to select the record where incoming transcation date falls between start and end date of the customer from dim table.

Ex Input Data:

| CUSTOMER_ID | CUSTOMER_NAME | TRANSACTION_DT |

| 1 | UMA | 2011-03-01 |

| 1 | UMA | 2010-05-01 |

Ex Di Data:

| CUSTOMER_ID | CITY | ZIP_CODE | START_DT | END_DT |

| 1 | BUENA PARK | 90620 | 2010-01-01 | 2010-12-31 |

| 1 | CYPRESS | 90630 | 2011-01-01 | 2011-04-30 |

Expected Output:

| CUSTOMER_ID | CUSTOMER_NAME | TRANSACTION_DT | CITY | ZIP_CODE |

| 1 | UMA | 2011-03-01 | CYPRESS | 90630 |

| 1 | UMA | 2010-05-01 | BUENA PARK | 90620 |

Configure the lookup stage as shown below.Double click on Lnk_input.TRANSACTION_DATE column.(specifying condition on the input link)

You need to specify return multiple rows from the reference link otherwise you will get following warning in the job log.Even though we have two distinct rows base on customer_id,start_dt and end_dt columns but datastage is considering duplicate rows based on customer_id key only.

You need to specify return multiple rows from the reference link otherwise you will get following warning in the job log.Even though we have two distinct rows base on customer_id,start_dt and end_dt columns but datastage is considering duplicate rows based on customer_id key only.

stg_Lkp,0: Ignoring duplicate entry; no further warnings will be issued for this table

Compile and Run the job:

Scenario 2:Specify range on reference link:

Scenario 2:Specify range on reference link:

This concludes lookup stage configuration for different scenarios.

This concludes lookup stage configuration for different scenarios.

Join stage

Join Stage:

The Join stage is a processing stage. It performs join operations on two or more data sets input to the

stage and then outputs the resulting data set. The Join stage is one of three stages that join tables based

on the values of key columns. The other two are:

1)Lookup Stage(https://datastage4u.wordpress.com/2011/04/30/lookup-stage/)

2)Merge Stage(https://datastage4u.wordpress.com/2011/04/30/merge-stage)

The three stages differ mainly in the memory they use, the treatment of rows with unmatched keys, and

their requirements for data being input.

If these take up a large amount of memory relative to the physical RAM memory size of the computer you are running on, then a lookup stage might thrash because the reference datasets might not fit in RAM along with everything else that has to be in RAM. This results in very slow performance since each lookup operation can, and typically

does, cause a page fault and an I/O operation.

So, if the reference datasets are big enough to cause trouble, use a join. A join does a high-speed sort on

the driving and reference datasets. This can involve I/O if the data is big enough, but the I/O is all

highly optimized and sequential. Once the sort is over the join processing is very fast and never involves

paging or other I/O.

In the below sections will show join stage configuration in datastage jobs:

Left Dataset:

| CUSTOMER_ID | CUSTOMER_NAME |

| 1 | UMA |

| 2 | POOJI |

| 3 | KATRU |

Right Dataset or Intermediate Dataset:

| CUSTOMER_ID | CITY | ZIP_CODE |

| 1 | CYPRESS | 90630 |

| 2 | CYPRESS | 90630 |

Scenario 1)

InnerJoin:Inner transfers records from input data sets whose key columns contain equal values to the output

data set. Records whose key columns do not contain equal values are dropped.

Step1)Configure sequential file stage to read both inputs.

Step2)Configure Join stage

In order to specify join key columns both column names should be same from both the inputs.In case of lookup stage this is not required which is also a difference between join and lookup stages.

Click on properties tab in the stage page and select key columns to perform the join.In our case we are using customer_id as key column .

Join stage expects both inputs need to be partitioned and sorted based on join key columns.Go to the partitioning tab of input stage page and select hash partitioning as shown in below figure.

Go to the Advanced tab in stage page and clear the preserve partition flag to avoid warning while writing into sequential file stage.If you are using dataset then you can use propagate becuase dataset is parallel stage it will keep the exact partitions.

Go to the Mapping tab of the output page and select the required columns in the output

Go to the Link Ordering tab in the stage page and check the input link order.

Compile and run the job:

I am adding new column Customer Name in the right link to explain a scenario where non-key column name should be different from both inputs otherwise will get warning from the datastage job.

Compile and run the job and check the job log.We got following warning in the job.

stg_InnerJoin: When checking operator: Dropping component “CUSTOMER_NAME” because of a prior component with the same name.

Note:Non Key column names should be different from both the inputs to the join stage to avoid the above warning.

2)Left Outer Join

Compile and run the job and check results:

If you observe the output you can see all 3 left rows came out from the join stage.

Join stage doesnot have reject link to capture unmatched rows.Add a transformer stage after join stage and check for unmacted rows and write it to a another link.

Join stage doesnot have reject link to capture unmatched rows.Add a transformer stage after join stage and check for unmacted rows and write it to a another link.

when a match does not occur, the Join stage inserts values into the unmatched non-key column(s) using the following rules:

a) If the non-key column is defined as nullable (on the Join input links) then Enterprise Edition

will insert NULL values in the unmatched columns

b) If the non-key column is defined as not-nullable, then Enterprise Edition inserts “default”

values based on the data type. For example, the default value for an Integer is zero, the default

value for a Varchar is an empty string (“”), and the default value for a Char is a string of

padchar characters equal to the length of the Char column.

For this reason, care must be taken to change the column properties to allow NULL values

before the Join. This is most easily done by inserting a Copy stage and mapping a column from

NON-NULLABLE to NULLABLE.

A Transformer stage can be used to test for NULL values in unmatched columns.

In most cases, it is best to use a Column Generator to add an ‘indicator’ column, with a constant value,

to each of the inner links and test that column for the constant after you have performed the join. This

isolates your match/no-match logic from any changes in the metadata. This is also handy with Lookups

that have multiple reference links.

Add a column generator stage and define MATCH_IND column and specify value = ‘Y’.In the transformer stage check If MATCH_IND=’Y’ then matched else unmatched.

Remove Duplicates Stage

Remove Duplicates Stage:

In this article I will explain how to use remove duplicate stage to select unique record from the incoming data based on keys.

Input :

| CUSTOMER_ID | CUSTOMER_NAME | CUSTOMER_CITY | DATE |

| 1 | UMA | BUENA PARK | 20100101 |

| 1 | UMA | CYPRESS | 20110101 |

If you look at above input we have two records with same customer name.

Business Logic:Select a most recent customer record.

we need to remove duplicates from the incoming data based on customer_name and customer_id keys and select most recent record base on the date.

Expected Output:(most recent record based on Date)

| CUSTOMER_ID | CUSTOMER_NAME | CUSTOMER_CITY | DATE |

| 1 | UMA | CYPRESS | 20110101 |

In order to implement the above business logic we need to use Remove Duplicates Stage in our datastage job design.

We are using sequential file stage to read the input data.

Step1) configure sequential file stage

We have defined data as datatype for DATE field and by default datastage uses %yyyy-%mm-%dd as date format string.We need to double click on row 4 in the above screen and configure date format as %yyyy%mm%dd.

Now add remove duplicate stage to the job canvas by drag and drop from palette.Double click on remove dup stage and go to the properties tab on the stage page.

select keys the define the duplicates based on the given business logic.In our case CUSTOMER_ID and CUSTOMER_NAME are keys that defined duplicates in the data.

We need to sort and partition the data on the duplicate keys to make sure ros with same keys should go the same datastage partition node.

We need to sort and partition the data on the duplicate keys to make sure ros with same keys should go the same datastage partition node.

Go to the partition tab in the input page of the rem dup stage.

Partition Type:Hash

Now we need to sort the data on date column(No need to partition) in order to select single record with latest date.

Now we need to sort the data on date column(No need to partition) in order to select single record with latest date.

Please make sure the order of the partition and sort key columns should be same with keys that define the duplicate rows.

Please make sure the order of the partition and sort key columns should be same with keys that define the duplicate rows.

Now go the properties tab in the stage page and select Duplicate To Retain = Last.we configured DATE sorting in ascending that is the reason i have selected Duplicate To Retain = Last as shown in below figure.

Now add sequential file stage as output stage to create a output file and that completes our job design as shown like below.

Now add sequential file stage as output stage to create a output file and that completes our job design as shown like below.

Compile and run the job:

Now look at the director log to see if there are any warnings.

Now look at the director log to see if there are any warnings.

I found following warning in the job log:

I found following warning in the job log:

stg_out: When checking operator: A sequential operator cannot preserve the partitioning

of the parallel data set on input port 0

To remove this warning from the job log go to the Advances tab in the stage page and clear preserver partition flag.

![]() This job finished successfully with out any warnings.

This job finished successfully with out any warnings.

Now i will change the partition and sort order of the columns in the partition tab of the stage page:

I ran the job with the above change and got the following warning the job log:

I ran the job with the above change and got the following warning the job log:

stg_remDup: When checking operator: User inserted sort “stg_remDup.Lnk_Input_remdup_Sort” does not fulfill the sort requirements of the downstream operator “stg_remDup”

So we need to make sure the order of the partition and sort key columns should be same with keys that define the duplicate rows to avoid above warning and incorrect results in the output.

Pivot Enterprise stage

Pivot Enterprise stage:

The Pivot Enterprise stage is a processing stage that pivots data horizontally and vertically.

Pivot Types:

1)Horizontal

2)Vertical

Horizontal pivoting maps a set of columns in an input row to a single column in multiple output rows.

The output data of the horizontal pivot action typically has fewer columns, but more rows than the input

data.

Input Data:

| CUSTOMER_ID | CUSTOMER_NAME | JAN_EXPENSE | FEB_EXPENSE | MAR_EXPENSE |

| 1 | UMA | 100 | 200 | 300 |

| 2 | POOJITHA | 200 | 300 | 400 |

Output Data:

| CUSTOMER_ID | CUSTOMER_NAME | Q1EXPENSE |

| 1 | UMA | 100 |

| 1 | UMA | 200 |

| 1 | UMA | 300 |

| 2 | POOJITHA | 200 |

| 2 | POOJITHA | 300 |

| 2 | POOJITHA | 400 |

Specify a horizontal operation in Pivot enterprise stage.

Create basic datastage job using sequential file as input stage to read input data,Pivot Enterprise stage and sequential file stage as output to create output data.

Basic Job Design:

Configure sequential file stage as shown below to read input data(comma delimited input file).

Configure sequential file stage as shown below to read input data(comma delimited input file).

Step2)Configure pivot enterprise stage.Double click on pivot enterprise stage and configure shown like below.

Step2)Configure pivot enterprise stage.Double click on pivot enterprise stage and configure shown like below.

*Go to the properties page and select Pivot Type = Horizontal

Click on Pivot Properties tab on stage properties page and follow following steps to configure for Horizontal pivot:

Click on Pivot Properties tab on stage properties page and follow following steps to configure for Horizontal pivot:

You can generate a pivot index that will assign an index number to each row within sets of horizontally

pivoted data:

Click on the above highlighted red circle.

Click on the above highlighted red circle.

Now Click on the Mapping tab of the output page:

Now Click on the Mapping tab of the output page:

Now configure Output sequential file stage to create a delimited output file as shown below.

Now configure Output sequential file stage to create a delimited output file as shown below.

Vertical pivoting maps a set of rows in the input data to single or multiple output columns. The array

size determines the number of rows in the output data. The output data of the vertical pivot action

typically has more columns, but fewer rows than the input data.

Input Data:

| CUSTOMER_ID | CUSTOMER_NAME | Q1EXPENSE |

| 1 | UMA | 100 |

| 1 | UMA | 200 |

| 1 | UMA | 300 |

| 2 | POOJITHA | 200 |

| 2 | POOJITHA | 300 |

| 2 | POOJITHA | 400 |

Output Data:

| CUSTOMER_ID | CUSTOMER_NAME | JAN_EXPENSE | FEB_EXPENSE | MAR_EXPENSE | Q1AVERAGE |

| 1 | UMA | 100 | 200 | 300 | 200 |

| 2 | POOJITHA | 200 | 300 | 400 | 300 |

Now open Pivot enterprise stage and go the properties tab on stage page:

Go to pivotproperties tab on the stage page and click load button to load metadata:

Now specify array size as 3 because we are have 3 months expenses in the quarter expense of in the input data and we need to convert into 1 row.

Now specify array size as 3 because we are have 3 months expenses in the quarter expense of in the input data and we need to convert into 1 row.

Designing Datastage Jobs for Good Perfomance

Performance Points:

1) Avoid unnecessary type conversions: set the OSH_PRINT_SCHEMAS environment variable to verify that run time schemas match the job design column definitions. If you are using stage variables on a Transformer stage, ensure that their data types match the expected result types.

2) Use Transformer stages sparingly and wisely. Transformer stages can slow down your job. Do not have multiple stages where the functionality could be incorporated into a single stage, and use other stage types to perform simple transformation operations

3) Increase Sort performance where possible. Careful job design can improve the performance of sort operations, both in standalone Sort stages and in on-link sorts specified in the Inputs page Partitioning tab of otherstage types.

4) Remove Unneeded Columns. Remove unneeded columns as early as possible within the job flow. Every additional unused column requires additional buffer memory, which can impact performance and make each row transfer from one stage to the next more expensive. If possible, when reading from databases, use a select list to read just the columns required, rather than the entire table.

5) Avoid reading from sequential files using the Same partitioning method. Unless you have specified more than one source file, this will result in the entire file being read into a single partition, making the entiredownstream flow run sequentially unless you explicitly repartition

The environment variable APT_STRING_PADCHAR can be used to change the default pad character from an ASCII NULL (0x0) to another character; for example, an ASCII space (Ox20) .

6)It is important to note that the individual SQL statements required by a Sparse Lookup are an expensive operation from a performance perspective. In most cases, it is faster to use a DataStage Join stage between the input and DB2 reference data than it is to perform a Sparse Lookup.

7) For scenarios where the number of input rows is significantly smaller (1:100 or more) than the number of reference rows in a DB2 or Oracle table, a Sparse Lookup may be appropriate. CPU-intensive applications, which typically perform multiple CPUdemanding operations on each record, benefit from the greatest possible parallelism, up to the capacity supported by your system.

8)Parallel jobs with large memory requirements can benefit from parallelism if they act on data that has been partitioned and if the required memory is also divided among partitions.

9)Applications that are disk- or I/O-intensive, such as those that extract data from and load data into RDBMSs, benefit from configurations in which the number of logical nodes equals the number of disk spindles being accessed. For example, if a table is fragmented 16 ways inside a database or if a data set is spread across 16 disk drives, set up a node pool consisting of 16 processing nodes.

10)For some jobs, especially those that are disk-intensive, you must sometimes configure your system to prevent the RDBMS from having either to redistribute load data or to re-partition the data from an extract operation.

11) The speed of communication among stages should be optimized by your configuration. For example, jobs whose stages exchange large amounts of data should be assigned to nodes where stages communicate by either shared memory (in an SMP environment) or a high-speed link (in an MPP environment). The relative placement of jobs whose stages share small amounts of data is less important.

12)Turn off Runtime Column propagation wherever it’s not required.

13) If you are using a copy or a filter stage either immediately after or immediately before a transformer stage, you are reducing the efficiency by using more stages because a transformer does the job of both copy stage as well as a filter stage

14)If you have a single input and single output when you are using a copy stage then set the force option to tru so that DataStage should not try to optimize the job by removing the Copy operation.